YouTube videos: Optimizing LLM Accuracy

RAG vs Fine-Tuning vs Prompt Engineering: Optimizing AI Models

Context Optimization vs LLM Optimization: Choosing the Right Approach

Advanced RAG techniques for developers

A Survey of Techniques for Maximizing LLM Performance

RAG vs. Fine Tuning

Fine-Tuning LLMs for RAG: Boost Model Performance and Accuracy

Deep Dive: Optimizing LLM inference

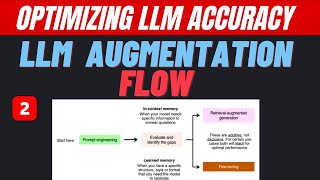

2. LLM Augmentation Flow | Optimizing LLM Accuracy

Prompt Optimization Techniques for a 100x LLM Boost | LIVE Coding Colab | OpenRouter API

2 Methods For Improving Retrieval in RAG

LLM Optimization vs Context Optimization: Which is Better for AI?

LangChain RAG: Optimizing AI Models for Accurate Responses

LLM Optimization Techniques You MUST Know for Faster, Cheaper AI [TOP 10 TECHNIQUES]

EASIEST Way to Fine-Tune a LLM and Use It With Ollama

Optimize Your AI - Quantization Explained

Evaluating the Output of Your LLM (Large Language Models): Insights from Microsoft & LangChain

Rajarshi Tarafdar | Optimizing LLM Performance: Scaling Strategies for Efficient Model Deployment

RHEL AI: Best Practices And Optimization Techniques To Achieve Accurate Custom LLM - DevConf.IN 2025

LangWatch LLM Optimization Studio

LLM Optimization LLM Part 3 - Improve LLM accuracy with GraphDB